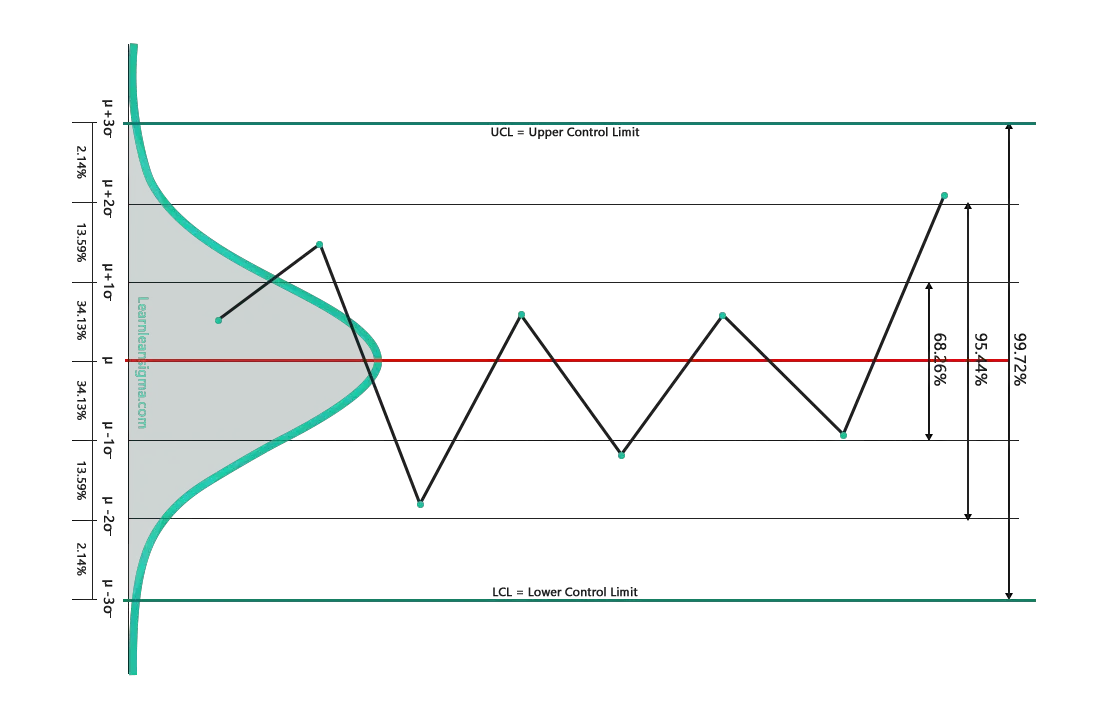

- Because the area under the curve equals one, the total probability of all conceivable outcomes is one.

- The mean, median, and mode are all the same.

- Asymptotically, the curve approaches but never reaches the x-axis.

- µ is the Greek letter "mu" and is used to denote the mean.

- σ is the Greek letter "sigma" and is used to denote the standard deviation.

- In a normal distribution (bell curve):

- 99.73% of data points fall between -3 standard deviations and +3 standard deviations from the mean.

- 95.45% of data points fall between -2 standard deviations and +2 standard deviations from the mean.

- 68.27% of data points fall between -1 standard deviation and +1 standard deviation from the mean.
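The percentages above can be checked numerically. The following is a minimal sketch, assuming only Python's standard library; the function name `coverage_within` is made up for this illustration. It uses the fact that, for a normal distribution, the share of values within k standard deviations of the mean equals erf(k / √2).

```python
import math


def coverage_within(k: float) -> float:
    """Share of a normal distribution lying within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"within ±{k} standard deviations: {coverage_within(k) * 100:.2f}%")
# within ±1 standard deviations: 68.27%
# within ±2 standard deviations: 95.45%
# within ±3 standard deviations: 99.73%
```

The complement of the last figure, roughly 0.27%, is the share of points expected beyond ±3 standard deviations.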

The normal distribution is widely used in statistics and probability because it can approximate many real-world phenomena, such as the distribution of human heights and weights, and the distribution of errors in measurements. It is also often used as a reference distribution to compare other distributions against.

In SPC, the normal distribution is used to understand and analyse process data, including checking whether the data is normally distributed.

In SPC, standard deviation is used to calculate control limits for control charts and also used in process capability analysis. Understanding the standard deviation and the range of +1 and -1 standard deviation from the mean is important in order to interpret the results of control charts and make informed decisions on how to improve a process.

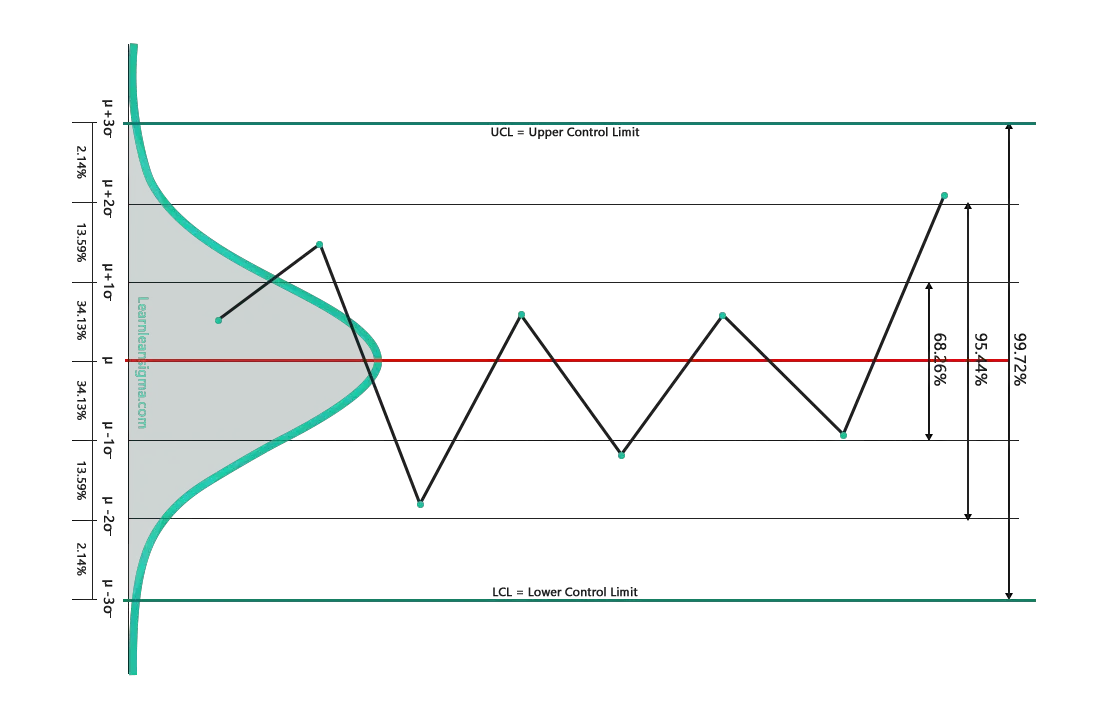

If we rotate the bell curve 90 degrees clockwise and add a line graph onto it, you can see the normal distribution of data points (see the example image). We will cover control charts later in the course; this is only to help you visualise how the standard deviation of data points relates to control charts.

- The UCL is the Upper Control Limit and is always +3 standard deviations from the mean.

- The LCL is the Lower Control Limit and is always -3 standard deviations from the mean.

- All data points within the UCL and LCL are classed as common cause variation, as they are statistically likely and account for 99.73% of all data points.

- Any data points outside the UCL and LCL are classed as special cause variation, as they are statistically unlikely and will only occur around 2.7 times in 1000 data points. This is so rare that any data point that falls outside the control limits should be explored to understand why it happened.

- The data itself is what sets the control limits, so if the input data changes, so too will the control limits.
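The points above can be sketched in a few lines of code. This is a simplified illustration, not a full control chart method: it assumes the limits are taken directly as the mean plus or minus 3 standard deviations of the data, uses the population standard deviation, and the function name `control_limits` and the measurements are invented for the example.

```python
import statistics


def control_limits(data):
    """Return (LCL, centre line, UCL) as mean -/+ 3 standard deviations.

    Assumption: the population standard deviation of the data is used.
    """
    mean = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    return mean - 3 * sigma, mean, mean + 3 * sigma


# Hypothetical measurements, for illustration only.
measurements = [50.1, 49.8, 50.3, 49.9, 50.0, 50.2, 49.7, 50.0]
lcl, centre, ucl = control_limits(measurements)
print(f"LCL={lcl:.3f}  mean={centre:.3f}  UCL={ucl:.3f}")

# If the input data changes, the limits change with it.
shifted = [x + 1.0 for x in measurements]
shifted_lcl, shifted_centre, shifted_ucl = control_limits(shifted)
print(f"LCL={shifted_lcl:.3f}  mean={shifted_centre:.3f}  UCL={shifted_ucl:.3f}")
```

Shifting every measurement up by 1 moves the centre line and both limits up by 1, which is the sense in which the data sets the limits.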

When we refer to +1 or -1 standard deviations from the mean, we are referring to the values that lie 1 standard deviation above or 1 standard deviation below the mean. For example, if the mean of a set of data is 50 and the standard deviation is 5, then +1 standard deviation from the mean would be 55 and -1 standard deviation from the mean would be 45. Therefore, if the data is normally distributed, about 68.27% of all data points will fall between 45 and 55.
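The worked example can be reproduced with Python's standard library. This is a small sketch that assumes the data is exactly normally distributed with mean 50 and standard deviation 5; the variable names are chosen for this illustration.

```python
from statistics import NormalDist

# Assumed model: a normal distribution with mean 50 and standard deviation 5.
dist = NormalDist(mu=50, sigma=5)

lower = dist.mean - dist.stdev  # -1 standard deviation from the mean
upper = dist.mean + dist.stdev  # +1 standard deviation from the mean

# Share of values expected between the two bounds.
share = dist.cdf(upper) - dist.cdf(lower)
print(lower, upper, round(share * 100, 2))
# 45.0 55.0 68.27
```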

The spread of data

In statistics, standard deviation is a measure of a data set’s spread. It indicates how far the data in a set typically deviates from the mean. The standard deviation is computed by squaring the difference between each data point and the mean, adding up the squared differences, dividing the sum by the number of data points (or by one less than that number when the data is a sample), and then taking the square root of the result.
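The calculation can be followed step by step in code. The sketch below assumes the population form of the standard deviation (dividing by the number of data points); the function name `population_std_dev` and the example values are illustrative only.

```python
import math
import statistics


def population_std_dev(data):
    """Standard deviation computed step by step (population form)."""
    mean = sum(data) / len(data)
    squared_diffs = [(x - mean) ** 2 for x in data]  # square each deviation
    variance = sum(squared_diffs) / len(data)        # average squared deviation
    return math.sqrt(variance)                       # back to the original units


values = [2, 4, 4, 4, 5, 5, 7, 9]     # mean is 5
print(population_std_dev(values))     # 2.0
print(statistics.pstdev(values))      # library equivalent, population form
print(statistics.stdev(values))       # sample form, divides by n - 1
```

The sample form gives a slightly larger value because it divides by n - 1 rather than n.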

Standard deviation is used in the context of Statistical Process Control (SPC) to find trends in a data set and assess whether the variation in the data is due to a common cause or a special cause.

Common cause variation is the inherent variation that is always present in a process and is a result of the natural variation of the process. It is also known as random variation and is caused by factors that are hard to control or measure, such as measurement error. Common cause variation is usually stable and predictable and follows a normal distribution.

Special cause variation, on the other hand, is caused by specific factors that are not inherent in the process. It is also known as assignable variation and is caused by factors that can be controlled or measured, such as changes in equipment or staff. Special cause variation is usually unstable and unpredictable and does not follow a normal distribution.

If the data follows a normal distribution and the data points fall within the permitted range (the control limits, set at 3 standard deviations either side of the mean), the variation is deemed common cause variation and no further action is required in SPC. However, if data points fall outside the permitted range, the variation is deemed special cause variation and requires further investigation to determine the cause and make appropriate process improvements.
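One way to read the rule above is as a check of each new data point against limits set from earlier data. The following is a minimal sketch under that assumption: limits are the baseline mean plus or minus 3 population standard deviations, and the function name `classify_points` and all values are hypothetical. Real control charts apply additional rules that are not shown here.

```python
import statistics


def classify_points(baseline, new_points):
    """Label each new point against mean +/- 3 standard deviations of the baseline."""
    mean = statistics.fmean(baseline)
    sigma = statistics.pstdev(baseline)  # assumption: population standard deviation
    lcl, ucl = mean - 3 * sigma, mean + 3 * sigma
    return [
        (x, "common cause" if lcl <= x <= ucl else "special cause")
        for x in new_points
    ]


# Hypothetical baseline measurements and new observations.
baseline = [50.1, 49.8, 50.3, 49.9, 50.0, 50.2, 49.7, 50.0]
results = classify_points(baseline, [50.1, 49.9, 51.5])
for value, label in results:
    print(value, label)
```

Here the limits come from a separate baseline so that an unusual new point does not inflate the standard deviation used to judge it.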

In summary, standard deviation is a measure of a data set’s spread, and it is used in SPC to find patterns in a data set and assess whether the variation in the data is due to a common cause or a special cause. Understanding the distinction between common and special cause variation is critical for identifying the source of variability and making appropriate process modifications.